An Attractive Alternative to Bitcoin

Bitcoin (BTC) is becoming more and more mainstream. Over 50 million in the U.S. now own it. After a long wait for regulatory approval, there

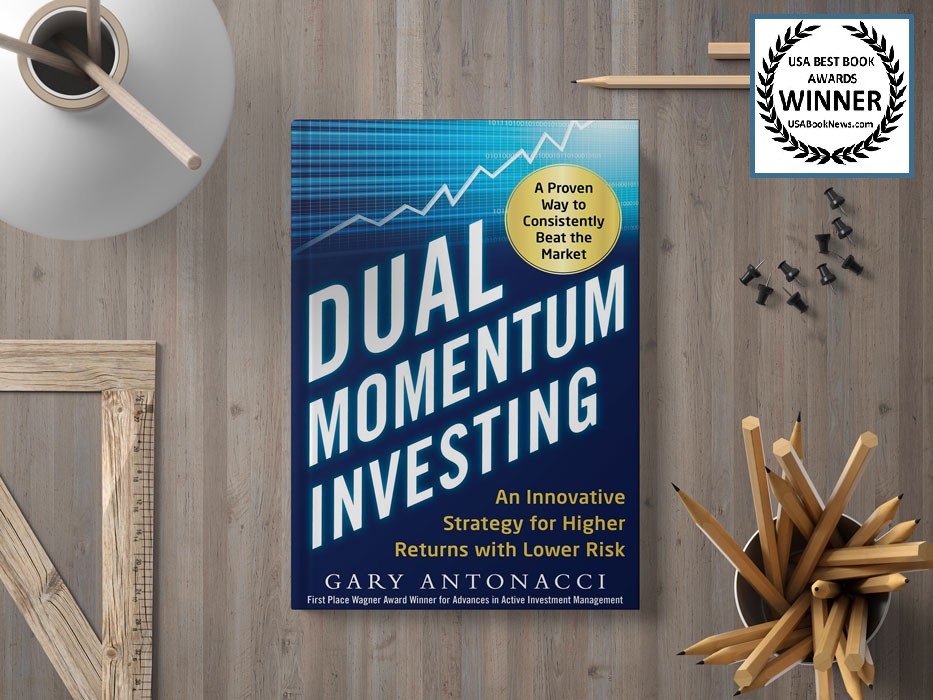

Presenting research and applications of dual momentum investing as developed by its founder, Gary Antonacci, author of the book Dual Momentum Investing

Gary Antonacci, founder of Optimal Momentum, has over 45 years of experience as an investment professional focusing on underexploited investment opportunities.

After receiving his MBA from Harvard Business School, Gary concentrated on researching and developing innovative investment strategies grounded in academic research.

His research on momentum investing was the first-place winner in 2012 and the second-place winner in 2011 of the Founders Award for Advances in Active Investment Management given annually by the National Association of Active Investment Managers (NAAIM).

Gary introduced the world to dual momentum, which combines relative strength price momentum with trend-following absolute momentum.
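As a rough illustration of how the two filters fit together, here is a minimal sketch. It assumes a trailing lookback return for each risky asset (for example 12 months), Treasury bills as the absolute momentum hurdle, and bonds as the safe asset; the function name `dual_momentum_pick`, the asset labels, and these parameter choices are hypothetical and are not taken from this page.

```python
def dual_momentum_pick(lookback_returns: dict[str, float],
                       tbill_return: float,
                       safe_asset: str) -> str:
    """Choose one asset using relative momentum, then absolute momentum.

    lookback_returns: trailing return of each risky asset over an assumed window.
    tbill_return: trailing T-bill return over the same window (assumed hurdle).
    safe_asset: asset held when absolute momentum is negative (assumed, e.g. bonds).
    """
    # Relative strength momentum: pick the risky asset with the highest trailing return.
    leader = max(lookback_returns, key=lookback_returns.get)
    # Absolute (trend-following) momentum: keep the leader only if it beat T-bills.
    if lookback_returns[leader] > tbill_return:
        return leader
    # Otherwise the trend is down, so step aside into the safe asset.
    return safe_asset


print(dual_momentum_pick({"US stocks": 0.12, "Intl stocks": 0.08}, 0.04, "Bonds"))    # US stocks
print(dual_momentum_pick({"US stocks": -0.05, "Intl stocks": -0.02}, 0.03, "Bonds"))  # Bonds
```

The relative step alone would always hold some risky asset; the absolute step is what lets the sketch move out of stocks when even the strongest one is trending below the T-bill hurdle.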

Momentum is based on the Newtonian notion that a body in motion tends to stay in motion. The classical economist David Ricardo translated momentum into investment terms with the oft-quoted phrase, “Cut your losses; let your profits run on.”

Momentum dominated the 1923 book Reminiscences of a Stock Operator, about the legendary trader Jesse Livermore. Momentum-based velocity ratings were used in the 1920s by H. M. Gartley and published in 1932 by Robert Rhea. George Seaman and Richard Wyckoff also wrote books in the 1930s that drew upon momentum principles.


I have never been a big fan of the bond market. From 1900 through 2022, the annualized real return of long-term U.S. government bonds was

Tail risk is the probability that an asset performs far below or far above its average past performance. Tail risk is an investor’s worst enemy.

© 2023 Portfolio Management Associates All Rights Reserved